The backtesting platform: The architecture and its requirements

Models and algorithms are the core of every algorithmic trading system. Designing and developing them requires an environment in which their performance and accuracy can be tested, validated, and verified. This environment usually relies on historical data. The models learn obvious and hidden patterns of market behavior from past data. The resulting strategy is then evaluated by simulating the decisions it would have made over that history. This process of evaluating a trading strategy on historical data is called backtesting.

A reliable, fast, and flexible backtesting platform is essential for developing algorithmic trading products. Many backtesting packages, libraries, and platforms are available online. These tools cover most of the requirements for developing a trading system. However, they are usually too general for the specific needs of trading firms and financial corporations. Hence, it is essential to design and develop a backtesting platform customized to particular requirements. At Eveince, we have built our own backtesting platform to meet the needs of our technology development process. This article describes the architecture of our backtesting platform and its elements in detail.

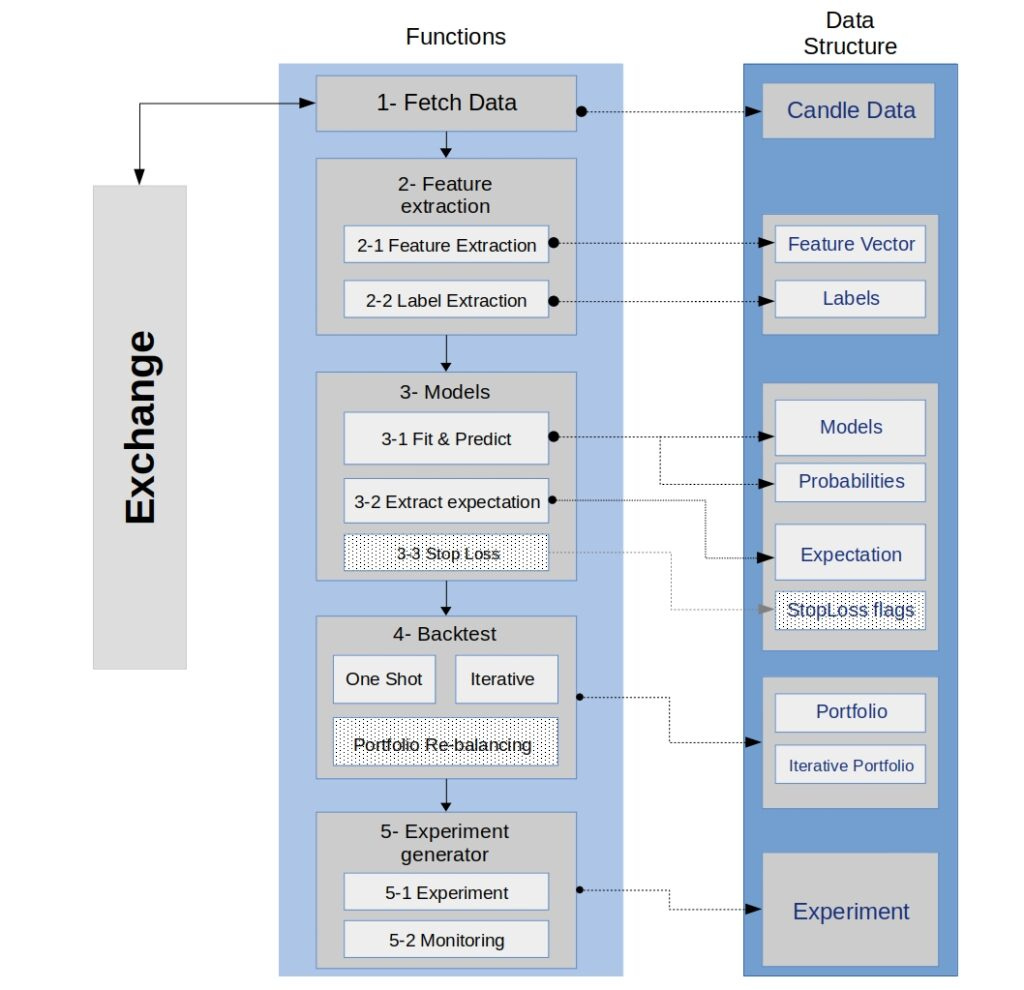

We designed a multi-layer backtesting platform in which each layer is a logically isolated module. This architecture separates the different parts of the system, which makes development simpler and more efficient. The main requirement of this approach is a standard input and output format for each module or layer. With that format in place, layers can communicate and pass data to each other without friction. As a result, different teams can develop layers independently as requirements change, regardless of how complex each layer is. The multi-layer architecture is shown in Figure 1.
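
The layer contract can be sketched in a few lines. This sketch assumes that every layer consumes and returns the same time-indexed structure, a dict mapping a timestamp to a record of named fields. The `Layer` protocol, the `Pipeline` class, and the two toy layers are hypothetical names for illustration, not Eveince's actual code.

```python
from typing import Any, Dict, List, Protocol

# Hypothetical shared data contract: timestamp (Unix seconds) -> record of named fields.
Frame = Dict[int, Dict[str, Any]]


class Layer(Protocol):
    """Any object with a `run(frame) -> frame` method can act as a layer."""

    name: str

    def run(self, frame: Frame) -> Frame: ...


class Pipeline:
    """Chains layers; each layer depends only on the shared Frame format."""

    def __init__(self, layers: List[Layer]) -> None:
        self.layers = layers

    def run(self, frame: Frame) -> Frame:
        for layer in self.layers:
            frame = layer.run(frame)
        return frame


class AddReturn:
    """Toy layer: adds the one-bar simple return of the close price."""

    name = "add_return"

    def run(self, frame: Frame) -> Frame:
        out: Frame = {}
        prev_close = None
        for ts in sorted(frame):
            row = dict(frame[ts])
            close = row["close"]
            row["ret"] = None if prev_close is None else close / prev_close - 1
            prev_close = close
            out[ts] = row
        return out


class AddSignal:
    """Toy layer: emits a long signal (1) whenever the last return was positive."""

    name = "add_signal"

    def run(self, frame: Frame) -> Frame:
        return {ts: {**row, "signal": 1 if (row["ret"] or 0) > 0 else 0}
                for ts, row in frame.items()}


if __name__ == "__main__":
    raw = {0: {"close": 100.0}, 60: {"close": 101.0}, 120: {"close": 100.5}}
    print(Pipeline([AddReturn(), AddSignal()]).run(raw))
```

Because layers share only the `Frame` shape, either toy layer could be replaced without touching the other. This is the property that lets separate teams own separate layers.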

As Figure 1 shows, most layers consist of multiple sub-layers, and each sub-layer is a submodule of the layer's broader functionality. The modules are connected logically, and in the same way all the separately produced data are linked by a common time index. This shared index makes it possible to merge the different parts of the data.
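
A shared time index lets data produced by separate layers be joined back together. The minimal standard-library sketch below inner-joins several tables keyed by timestamp. The `merge_on_time` helper is hypothetical. In practice, a dataframe library's join on a time index serves the same purpose.

```python
from typing import Any, Dict

Table = Dict[int, Dict[str, Any]]  # timestamp -> {column: value}


def merge_on_time(*tables: Table) -> Table:
    """Inner-join tables on their timestamp keys.

    Only timestamps present in every table are kept, so prices, features
    and labels produced by different layers line up row by row. If two
    tables share a column name, the later table's value wins.
    """
    if not tables:
        return {}
    common = set(tables[0])
    for table in tables[1:]:
        common &= set(table)
    merged: Table = {}
    for ts in sorted(common):
        row: Dict[str, Any] = {}
        for table in tables:
            row.update(table[ts])
        merged[ts] = row
    return merged


prices = {0: {"close": 100.0}, 60: {"close": 101.0}, 120: {"close": 99.0}}
features = {60: {"rsi": 55.2}, 120: {"rsi": 48.9}}
labels = {0: {"label": 1}, 60: {"label": -1}, 120: {"label": 0}}
print(merge_on_time(prices, features, labels))
```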

Layer one: Fetch Data

Exchanges usually provide standard APIs that traders can use to retrieve historical data. The first priority in building a backtesting platform is access to accurate price data at different time intervals. This layer implements the functions that retrieve data from various exchanges through their APIs and store it in the OHLCV (open, high, low, close, volume) candle format. The user only needs to specify the start and end time of the data, the list of symbols, and the time interval. The input and output formats of this layer are fixed. As a result, we can change the underlying APIs, switch exchanges, or modify the internal logic without affecting the other layers. We have two fetch functions. One retrieves data for a time range, and the other retrieves a given number of data points. More details are shown in Figure 2.
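
The two fetch functions can be sketched as follows. The sketch assumes a hypothetical per-exchange client with a `get_candles(symbol, interval, start, end)` method that returns OHLCV bars. The client, the function names, and the interval codes are all assumptions. Real exchange APIs differ in pagination and rate limits, and the fixed input/output contract hides those differences from the rest of the platform.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol

INTERVAL_SECONDS = {"1m": 60, "1h": 3600, "1d": 86400}  # assumed interval codes


@dataclass(frozen=True)
class Candle:
    """One OHLCV bar; `ts` is the bar's open time in Unix seconds."""
    ts: int
    open: float
    high: float
    low: float
    close: float
    volume: float


class ExchangeClient(Protocol):
    """Hypothetical per-exchange adapter; swapping it does not change the outputs."""

    def get_candles(self, symbol: str, interval: str, start: int, end: int) -> List[Candle]: ...


def fetch_by_range(client: ExchangeClient, symbols: List[str], interval: str,
                   start: int, end: int) -> Dict[str, List[Candle]]:
    """Fetch all bars with start <= ts < end for each symbol."""
    return {s: client.get_candles(s, interval, start, end) for s in symbols}


def fetch_by_count(client: ExchangeClient, symbols: List[str], interval: str,
                   end: int, count: int) -> Dict[str, List[Candle]]:
    """Fetch the last `count` bars that open before `end` for each symbol."""
    start = end - count * INTERVAL_SECONDS[interval]
    return fetch_by_range(client, symbols, interval, start, end)


class FakeClient:
    """In-memory stand-in for an exchange, used only for this example."""

    def get_candles(self, symbol: str, interval: str, start: int, end: int) -> List[Candle]:
        step = INTERVAL_SECONDS[interval]
        first = -(-start // step) * step  # first bar boundary >= start
        return [Candle(ts, 1.0, 1.0, 1.0, 1.0, 0.0) for ts in range(first, end, step)]


data = fetch_by_count(FakeClient(), ["BTCUSDT", "ETHUSDT"], "1h", end=36000, count=5)
print({s: [c.ts for c in bars] for s, bars in data.items()})
```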

Layer two: Feature Extraction

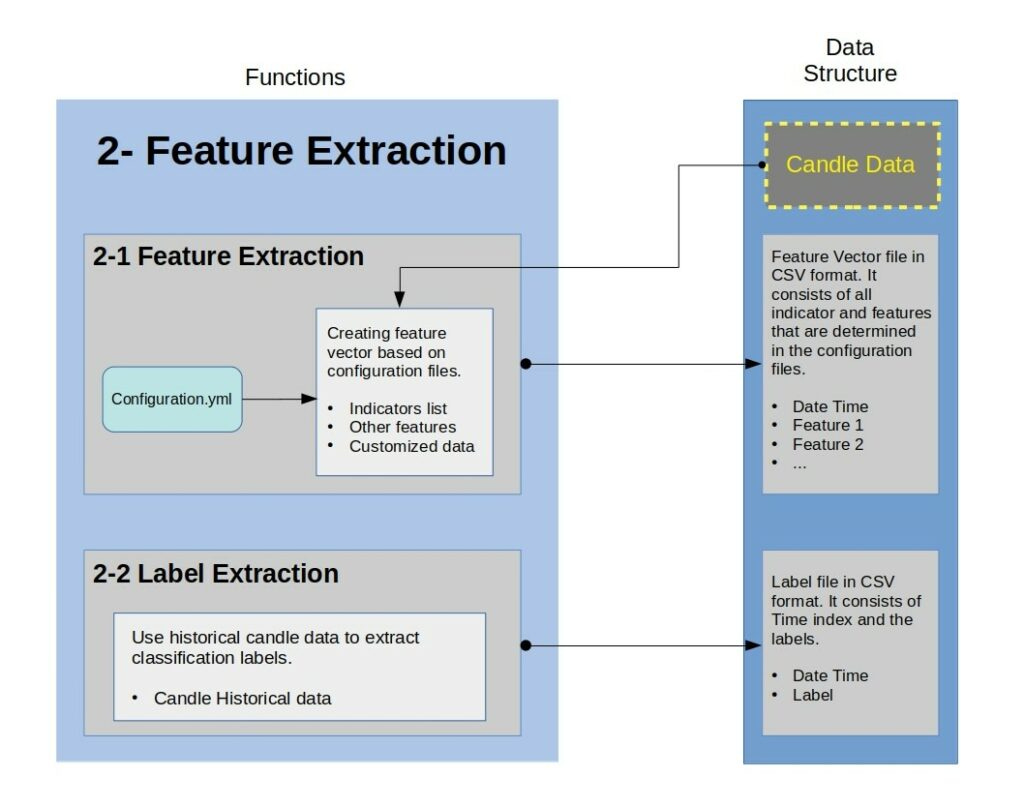

This layer is divided into two modules: feature extraction and label extraction. Every machine learning model needs its input data in the form of feature vectors. At Eveince, the data team develops quantitative trading strategies with a particular focus on machine learning methods and models. For this reason, features and feature vectors are among the most critical aspects of our work. This layer uses technical indicators, fundamental data, and augmented variables as features. We continually enrich our indicator library. We add the most useful technical indicators, and we design new indicators that capture further aspects of price data.
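
The sketch below shows what an indicator pool might look like: a small registry holding two classic technical indicators, a simple moving average and the Relative Strength Index (RSI). The decorator-based registry and its names are assumptions for this sketch. The RSI uses Wilder's smoothing, which is one common variant.

```python
from typing import Callable, Dict, List, Optional

Series = List[Optional[float]]
INDICATORS: Dict[str, Callable[..., Series]] = {}  # hypothetical indicator pool


def indicator(name: str):
    """Decorator that registers an indicator function in the shared pool."""
    def register(fn: Callable[..., Series]) -> Callable[..., Series]:
        INDICATORS[name] = fn
        return fn
    return register


@indicator("sma")
def sma(close: List[float], window: int) -> Series:
    """Simple moving average; None until `window` values are available."""
    out: Series = [None] * len(close)
    running = 0.0
    for i, price in enumerate(close):
        running += price
        if i >= window:
            running -= close[i - window]
        if i >= window - 1:
            out[i] = running / window
    return out


@indicator("rsi")
def rsi(close: List[float], window: int = 14) -> Series:
    """Relative Strength Index (0..100) with Wilder's smoothing."""
    out: Series = [None] * len(close)
    if len(close) <= window:
        return out
    deltas = [close[i] - close[i - 1] for i in range(1, len(close))]
    avg_gain = sum(max(d, 0.0) for d in deltas[:window]) / window
    avg_loss = sum(max(-d, 0.0) for d in deltas[:window]) / window

    def value(gain: float, loss: float) -> float:
        if loss == 0:
            return 100.0 if gain > 0 else 50.0  # flat prices map to a neutral 50
        return 100.0 - 100.0 / (1.0 + gain / loss)

    out[window] = value(avg_gain, avg_loss)
    for i in range(window, len(deltas)):
        d = deltas[i]  # deltas[i] is the change from close[i] to close[i + 1]
        avg_gain = (avg_gain * (window - 1) + max(d, 0.0)) / window
        avg_loss = (avg_loss * (window - 1) + max(-d, 0.0)) / window
        out[i + 1] = value(avg_gain, avg_loss)
    return out


print(INDICATORS["sma"]([1.0, 2.0, 3.0, 4.0], window=2))
```

New indicators only need the decorator to join the pool, so the rest of the layer can look them up by name.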

A notable design choice in this layer is a config file that describes the feature vector and serves as a manual sheet. In this file, the trader defines the features, their parameters, and their normalization methods. This makes adding new features much more straightforward than in other approaches.
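
Here is a minimal sketch of a config-driven feature vector. It assumes a JSON config in which each entry names an indicator, its parameters, and a normalization method. The schema, the `build_features` function, and the two normalizers (z-score and min-max) are hypothetical. To stay self-contained, the example defines its own small indicator functions.

```python
import json
import statistics
from typing import Callable, Dict, List, Optional

Series = List[Optional[float]]


def sma(close: List[float], window: int) -> Series:
    return [None if i < window - 1 else sum(close[i - window + 1:i + 1]) / window
            for i in range(len(close))]


def ret(close: List[float], lag: int) -> Series:
    return [None if i < lag else close[i] / close[i - lag] - 1 for i in range(len(close))]


def zscore(values: Series) -> Series:
    valid = [v for v in values if v is not None]
    mu, sd = statistics.mean(valid), statistics.pstdev(valid)
    return [None if v is None else (v - mu) / sd if sd else 0.0 for v in values]


def minmax(values: Series) -> Series:
    valid = [v for v in values if v is not None]
    lo, hi = min(valid), max(valid)
    return [None if v is None else (v - lo) / (hi - lo) if hi > lo else 0.0 for v in values]


INDICATORS: Dict[str, Callable[..., Series]] = {"sma": sma, "ret": ret}
NORMALIZERS: Dict[str, Callable[[Series], Series]] = {"zscore": zscore, "minmax": minmax}

# Hypothetical config schema: one entry per feature in the vector.
CONFIG = """
{"features": [
  {"name": "sma_3", "indicator": "sma", "params": {"window": 3}, "normalize": "zscore"},
  {"name": "ret_1", "indicator": "ret", "params": {"lag": 1},    "normalize": "minmax"}
]}
"""


def build_features(close: List[float], config: dict) -> Dict[str, Series]:
    """Compute and normalize every feature listed in the config."""
    columns: Dict[str, Series] = {}
    for spec in config["features"]:
        raw = INDICATORS[spec["indicator"]](close, **spec["params"])
        columns[spec["name"]] = NORMALIZERS[spec["normalize"]](raw)
    return columns


features = build_features([10.0, 11.0, 12.0, 11.0, 13.0], json.loads(CONFIG))
print(features)
```

For brevity, this sketch normalizes with statistics computed over the whole series. In a real backtest, those statistics should be fitted on the training window only (or computed on a rolling basis) to avoid look-ahead bias.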

The second sub-layer handles labeling. Classification problems, and supervised models in general, need labels that distinguish the classes the model must learn. Whenever we use supervised models for a problem, we therefore need to define labels. Labels can sometimes be calculated directly from the raw data. More often, we use more complex labels that separate the feature space more accurately. All the methods required for calculating labels are implemented in this layer.
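
One simple supervised labeling scheme is a fixed-horizon return label with a dead zone. A bar is labeled 1 if the return over the next `horizon` bars exceeds `threshold`, -1 if it falls below `-threshold`, and 0 otherwise. This is a basic scheme chosen for brevity, not the more complex labels described above. The function name and parameters are hypothetical.

```python
from typing import List, Optional


def fixed_horizon_labels(close: List[float], horizon: int,
                         threshold: float) -> List[Optional[int]]:
    """Label each bar by its forward return over `horizon` bars.

    The last `horizon` bars have no future price, so they get None and
    should be dropped before training.
    """
    labels: List[Optional[int]] = []
    for i, price in enumerate(close):
        if i + horizon >= len(close):
            labels.append(None)
            continue
        fwd = close[i + horizon] / price - 1  # forward return: uses future data by design
        if fwd > threshold:
            labels.append(1)
        elif fwd < -threshold:
            labels.append(-1)
        else:
            labels.append(0)
    return labels


print(fixed_horizon_labels([100, 103, 101, 98, 98.5], horizon=1, threshold=0.01))
```

Labels are built from future prices by construction. For that reason, they must only ever be used as training targets and never as features.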

Finally, the inputs of this layer are raw price data as CSV files, and the outputs are the final feature vectors and labels, stored in separate CSV files. The details of the feature extraction layer are shown in Figure 3.
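
The layer's file contract can be sketched with Python's standard `csv` module. The sketch reads raw OHLCV rows, then writes features and labels to two separate files that share a timestamp column, so later layers can join them on time. The column names, the helper names, and the toy feature and label are assumptions. `io.StringIO` stands in for real files.

```python
import csv
import io
from typing import Dict, List, TextIO


def read_prices(src: TextIO) -> List[Dict[str, float]]:
    """Read an OHLCV CSV with a header row into a list of float dicts."""
    return [{k: float(v) for k, v in row.items()} for row in csv.DictReader(src)]


def write_table(dst: TextIO, ts: List[float], columns: Dict[str, list]) -> None:
    """Write a timestamp column plus named columns; None becomes an empty cell."""
    writer = csv.writer(dst)
    writer.writerow(["ts", *columns])
    for i, t in enumerate(ts):
        writer.writerow([int(t), *("" if col[i] is None else col[i] for col in columns.values())])


raw = io.StringIO("ts,open,high,low,close,volume\n"
                  "0,100,101,99,100,5\n60,100,102,99,101,7\n120,101,101,98,99,4\n")
prices = read_prices(raw)
ts = [p["ts"] for p in prices]
close = [p["close"] for p in prices]
ret_1 = [None] + [close[i] / close[i - 1] - 1 for i in range(1, len(close))]
# Toy label: direction of the next close (1 up, -1 otherwise); the last bar has none.
label = [1 if b > a else -1 for a, b in zip(close, close[1:])] + [None]

features_csv, labels_csv = io.StringIO(), io.StringIO()  # stand-ins for two CSV files
write_table(features_csv, ts, {"ret_1": ret_1})
write_table(labels_csv, ts, {"label": label})
print(labels_csv.getvalue())
```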

Layer three: Model Fit and Predict

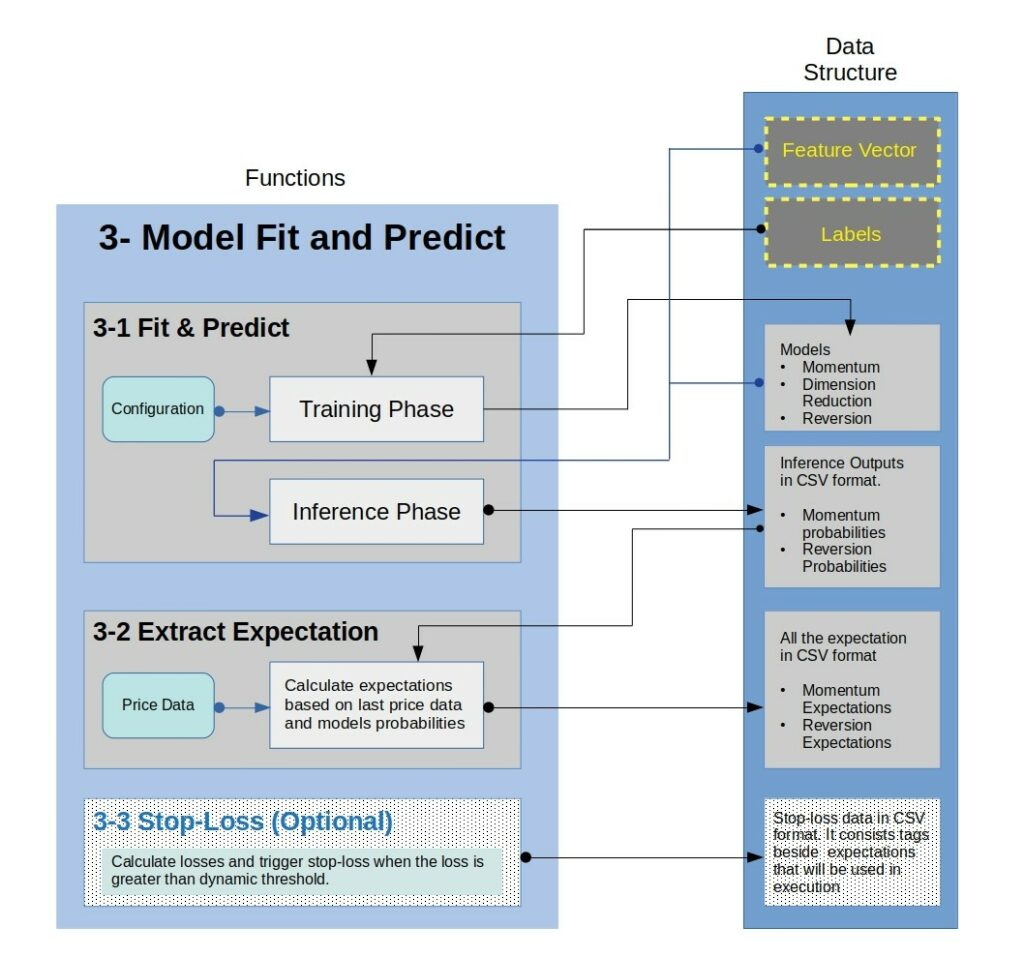

This layer consists of three essential sub-layers in our backtesting procedure.

The first sub-layer performs training and inference, and it is the most vital part of our platform. This step determines how we recognize patterns in the feature vectors and how we analyze them quantitatively. To explore new ideas or models, we only need to change the logic of these procedures. To run this sub-layer, we specify the training and testing data, the time period of the backtest, and the model's parameters. The training phase runs only when we build new models, whereas the inference phase runs in every backtest.

The outputs of inference are probabilities and confidence scores, which usually cannot be used directly for final decisions. Therefore, the second sub-layer takes the inference outputs and converts them into expectations of market behavior that can be used to create the final orders. In other words, this sub-layer maps inferences to expected market behavior.
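
Two pieces of this layer can be sketched briefly. First, the training and testing data for a backtest should be split chronologically, so that the model never sees data from the period it is tested on. Second, the mapping sub-layer turns class probabilities into an expectation. The rule below is a hypothetical example, not Eveince's actual mapping: expected direction = P(up) - P(down), set to zero when the most likely class falls below a confidence threshold. Both function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def chronological_split(timestamps: Sequence[int], train_end: int,
                        test_end: int) -> Tuple[List[int], List[int]]:
    """Indices for train (ts < train_end) and test (train_end <= ts < test_end)."""
    train = [i for i, t in enumerate(timestamps) if t < train_end]
    test = [i for i, t in enumerate(timestamps) if train_end <= t < test_end]
    return train, test


def to_expectation(probs: Dict[str, float], min_confidence: float = 0.5) -> float:
    """Map class probabilities {'down', 'flat', 'up'} to an expected direction in [-1, 1].

    Returns 0.0 (no view) when the most likely class is not confident enough.
    """
    if max(probs.values()) < min_confidence:
        return 0.0
    return probs.get("up", 0.0) - probs.get("down", 0.0)


train_idx, test_idx = chronological_split([0, 60, 120, 180, 240], train_end=180, test_end=300)
print(train_idx, test_idx)
print(to_expectation({"down": 0.1, "flat": 0.2, "up": 0.7}))
print(to_expectation({"down": 0.35, "flat": 0.3, "up": 0.35}))
```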

The last sub-layer, called stop-loss, adds stop-losses to the execution based on fixed rules. It adds flags that mark the time points at which a stop-loss is triggered, and the execution must take these points into account. The details of this layer are shown in Figure 4.
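
Here is a minimal sketch of a fixed-rule stop-loss. It assumes a fixed percentage stop measured from the entry price, and a position series of +1 (long), -1 (short), or 0 (flat). It returns one flag per bar marking where the stop is triggered, and the execution layer can treat each flag as a forced exit. Detecting the trigger from bar lows and highs, the entry-at-close rule, and the `stop_pct` parameter are assumptions of this sketch.

```python
from typing import List


def stop_loss_flags(position: List[int], close: List[float], low: List[float],
                    high: List[float], stop_pct: float) -> List[bool]:
    """Flag bars where a fixed-percentage stop-loss is hit.

    A trade opens at the close of the bar where `position` changes to a
    nonzero value. After a stop fires, the trade stays closed until the
    position signal changes again, so the same signal is not re-entered.
    """
    flags = [False] * len(position)
    entry = None      # entry price of the open trade; None when flat
    stopped = False   # True after a stop, until the signal changes
    for i, pos in enumerate(position):
        prev = position[i - 1] if i > 0 else 0
        if pos != prev:               # new signal: reset the trade state
            stopped = False
            entry = close[i] if pos != 0 else None
            continue                  # no stop check on the entry bar itself
        if pos == 0 or stopped or entry is None:
            continue
        if pos > 0 and low[i] <= entry * (1 - stop_pct):
            flags[i], stopped = True, True
        elif pos < 0 and high[i] >= entry * (1 + stop_pct):
            flags[i], stopped = True, True
    return flags


print(stop_loss_flags(position=[1, 1, 1, 1, -1, -1],
                      close=[100, 99, 97, 96, 96, 99],
                      low=[99, 98, 94, 95, 95, 97],
                      high=[101, 100, 98, 97, 97, 101],
                      stop_pct=0.05))
```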

Layer four: Execution

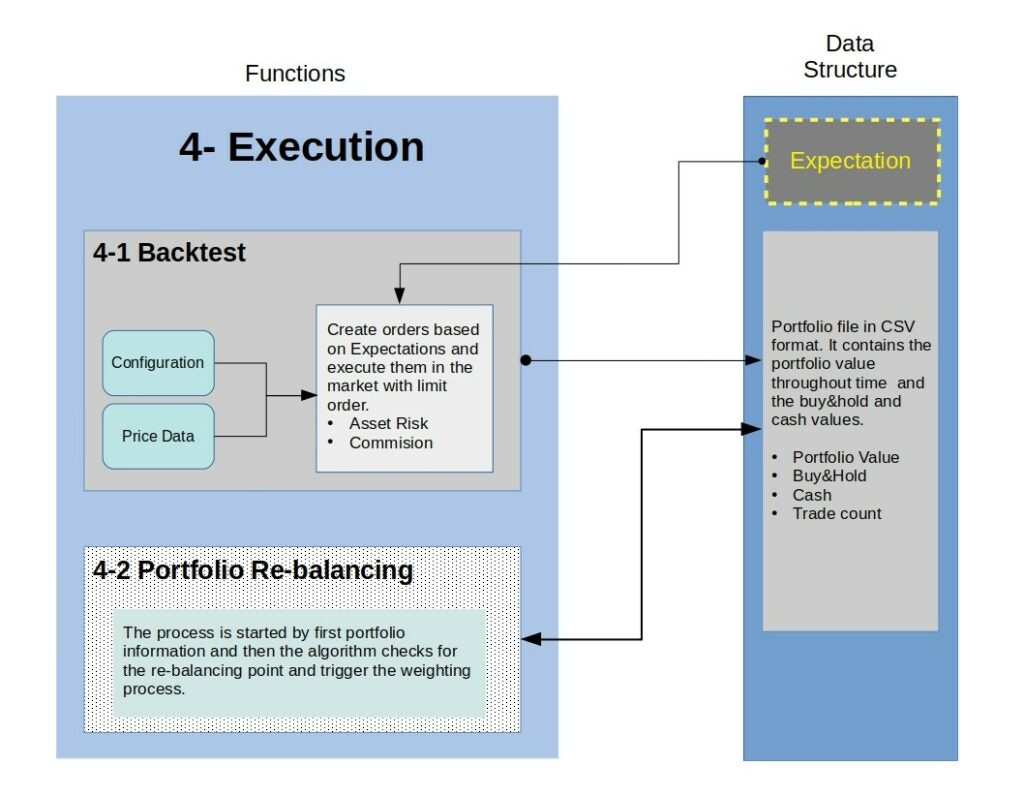

Execution is the main layer for creating and executing the orders that produce the portfolio. It consists of two sub-layers: execution and portfolio rebalancing. The first converts the expectation values from the previous layer into orders that can be executed on the exchange. As in other backtesting platforms, portfolio values are calculated by simulating execution without volume restrictions. Positions can be long or short, and we support both by implementing spot and futures execution methods. To perform the execution, this function uses some parameters from the configuration file along with the price data.

Every order executed in the simulation updates the portfolio value. The exact asset value is recalculated from the trade's profit or loss, minus a commission that depends on the trade amount. In this sub-layer, we also manage risk. At each point, we adjust the position size to the level of risk we can tolerate, based on the measured asset risk and the expected future behavior.
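
The sketch below is a simplified version of the simulated execution described above, for a single asset. Orders are filled at the bar close without volume limits, and a proportional commission is charged on the traded notional. The position size comes from a hypothetical volatility-targeting rule (`target_vol / realized_vol`, capped at full exposure), which stands in for the platform's actual risk model. Parameter names are assumptions. Short positions are modeled as negative units, which resembles a futures or margin account. Spot-only execution would clamp exposure to [0, 1].

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Portfolio:
    cash: float
    units: float = 0.0          # negative units represent a short position
    trades: int = 0
    values: List[float] = field(default_factory=list)

    def value(self, price: float) -> float:
        return self.cash + self.units * price


def position_size(realized_vol: Optional[float], target_vol: float) -> float:
    """Hypothetical risk rule: scale exposure toward target_vol, capped at 1 (no leverage)."""
    if not realized_vol:
        return 0.0              # no risk estimate yet: stay flat
    return min(1.0, target_vol / realized_vol)


def simulate(close: List[float], signal: List[float], vol: List[Optional[float]],
             initial_cash: float = 10_000.0, fee: float = 0.001,
             target_vol: float = 0.02) -> Portfolio:
    """Trade at the close whenever the target exposure changes; no volume limits."""
    pf = Portfolio(cash=initial_cash)
    prev_exposure = 0.0
    for price, sig, v in zip(close, signal, vol):
        exposure = sig * position_size(v, target_vol)  # fraction of equity, in [-1, 1]
        if exposure != prev_exposure:
            target_units = exposure * pf.value(price) / price
            traded = target_units - pf.units
            pf.cash -= traded * price + abs(traded) * price * fee  # notional plus commission
            pf.units = target_units
            pf.trades += 1
            prev_exposure = exposure
        pf.values.append(pf.value(price))            # mark to market at every bar
    return pf


pf = simulate([100.0, 110.0, 99.0], signal=[1, 1, -1], vol=[0.02, 0.02, 0.02])
print(pf.values, pf.trades, pf.cash)
```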

The second sub-layer, which is optional, rebalances the portfolio and reallocates assets according to performance and risk. For the first iteration, it needs one complete portfolio run. It then calculates the metrics point by point and triggers the rebalancing methods when needed. In this sub-layer, the execution process therefore runs multiple times, once for each trigger point, and generates a new portfolio each time.
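
One common trigger for rebalancing is drift from target weights. Once any asset's weight deviates from its target by more than a tolerance, the portfolio is reset to the target weights. This threshold rule and its parameter names are assumptions used for illustration, since the platform's own triggers are based on performance and risk metrics. The sketch only locates the trigger points at which execution would be rerun.

```python
from typing import Dict, List


def rebalance_points(prices: List[Dict[str, float]], targets: Dict[str, float],
                     initial_value: float, tolerance: float) -> List[int]:
    """Return bar indices at which a drift-based rebalance is triggered.

    Holdings start at the target weights and then drift with prices.
    Trading costs are ignored in this sketch.
    """
    units = {a: initial_value * w / prices[0][a] for a, w in targets.items()}
    points: List[int] = []
    for i, bar in enumerate(prices[1:], start=1):
        value = sum(units[a] * bar[a] for a in units)
        weights = {a: units[a] * bar[a] / value for a in units}
        if max(abs(weights[a] - targets[a]) for a in targets) > tolerance:
            points.append(i)
            units = {a: value * w / bar[a] for a, w in targets.items()}  # reset to targets
    return points


bars = [{"A": 100, "B": 100}, {"A": 110, "B": 100}, {"A": 130, "B": 100}, {"A": 130, "B": 100}]
print(rebalance_points(bars, {"A": 0.5, "B": 0.5}, initial_value=1000.0, tolerance=0.05))
```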

The outputs are the portfolio values and other calculated metrics, such as the trade count, buy-and-hold value, and cash amount. The details of this layer are shown in Figure 5.
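
The summary outputs named above can be computed from a simulated run. This sketch assumes that the per-bar portfolio values, the executed trade count, the final cash balance, and the close prices are available. It defines the buy-and-hold value as the initial capital fully invested at the first close, with no fees. The function and key names are hypothetical.

```python
from typing import Dict, List


def summarize(values: List[float], close: List[float], trades: int,
              cash: float, initial_cash: float) -> Dict[str, float]:
    """Collect the execution layer's summary outputs for one backtest."""
    buy_and_hold = initial_cash * close[-1] / close[0]  # invest everything at the first close
    return {
        "final_value": values[-1],
        "total_return": values[-1] / initial_cash - 1,
        "buy_and_hold_value": buy_and_hold,
        "excess_over_buy_and_hold": values[-1] - buy_and_hold,
        "trade_count": trades,
        "cash": cash,
    }


print(summarize([9990.0, 10990.0, 9870.21], [100.0, 110.0, 99.0],
                trades=2, cash=19760.21, initial_cash=10_000.0))
```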

Layer five: Observing

This layer is used to observe the backtesting results and the portfolio's performance metrics. It consists of two sub-layers. The first generates the experiment data for the visualizer. It divides the output into broker-level performance and the performance of individual symbols.
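
The experiment data for the visualizer could be split as follows. The sketch assumes that the execution layer emits one record per timestamp and symbol, holding the value of that symbol's position. The account-level series is the sum across symbols, and each symbol keeps its own series. The record layout and function name are hypothetical. Cash can be included as its own pseudo-symbol so that the total matches the portfolio value.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[int, str, float]  # (timestamp, symbol, value of that symbol's holding)


def split_experiment(records: List[Record]) -> Tuple[Dict[int, float], Dict[str, Dict[int, float]]]:
    """Return (account-level series, per-symbol series), both keyed by timestamp."""
    per_symbol: Dict[str, Dict[int, float]] = defaultdict(dict)
    total: Dict[int, float] = defaultdict(float)
    for ts, symbol, value in records:
        per_symbol[symbol][ts] = value
        total[ts] += value
    return (dict(sorted(total.items())),
            {s: dict(sorted(series.items())) for s, series in per_symbol.items()})


records = [(0, "BTC", 500.0), (0, "ETH", 500.0), (60, "BTC", 520.0), (60, "ETH", 490.0)]
account, by_symbol = split_experiment(records)
print(account, by_symbol)
```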

The second sub-layer visualizes the experiment data with charts, tables, and graphs. We also have a comparator that compares the outputs and performance of multiple portfolios side by side.
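
Here is a minimal comparator sketch. Given several portfolio value series, it computes a few standard metrics and prints them side by side: total return, maximum drawdown, and a per-bar Sharpe ratio with a zero risk-free rate. The metric selection, the function names, and the table format are assumptions. Annualizing the Sharpe ratio would also require the bar frequency.

```python
import statistics
from typing import Dict, List


def max_drawdown(values: List[float]) -> float:
    """Largest peak-to-trough decline as a fraction of the peak (0.1 means -10%)."""
    peak, worst = values[0], 0.0
    for v in values:
        peak = max(peak, v)
        worst = max(worst, (peak - v) / peak)
    return worst


def sharpe(values: List[float]) -> float:
    """Mean over standard deviation of per-bar returns (risk-free rate 0, not annualized)."""
    rets = [b / a - 1 for a, b in zip(values, values[1:])]
    if len(rets) < 2:
        return 0.0
    sd = statistics.stdev(rets)
    return statistics.mean(rets) / sd if sd else 0.0


def compare(portfolios: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Compute metrics for each portfolio and print them as an aligned table."""
    table = {
        name: {
            "total_return": vals[-1] / vals[0] - 1,
            "max_drawdown": max_drawdown(vals),
            "sharpe": sharpe(vals),
        }
        for name, vals in portfolios.items()
    }
    print(f"{'portfolio':<12}{'return':>10}{'max_dd':>10}{'sharpe':>10}")
    for name, m in table.items():
        print(f"{name:<12}{m['total_return']:>10.2%}{m['max_drawdown']:>10.2%}{m['sharpe']:>10.2f}")
    return table


compare({"strategy": [100.0, 110.0, 99.0, 120.0], "buy_hold": [100.0, 105.0, 100.0, 108.0]})
```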